Artificial intelligence (AI) has become an integral part of our daily lives, from the algorithms that power our social media feeds to the voice assistants that respond to our every command. But as technology continues to advance, one question looms large: Who does AI really work for?

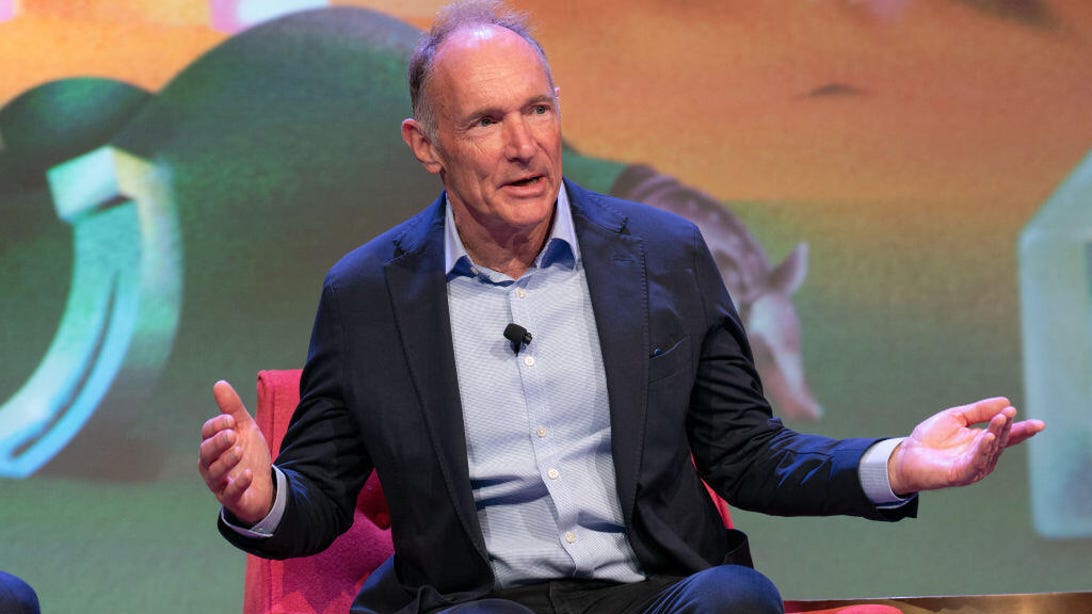

This was the very question posed by Tim Berners-Lee, the inventor of the World Wide Web, at the recent TED conference. He highlighted growing concerns about the ethical implications of AI and called for more transparency and accountability from the tech industry.

But before we dive into the debate, let’s first understand what AI is and how it works. In simple terms, AI is the simulation of human intelligence processes by machines, including learning, reasoning, and self-correction. It has the potential to transform industries from healthcare to finance, but it also raises ethical challenges of its own.

One of the primary concerns is the lack of diversity in AI development. Research has shown that AI algorithms can inherit the biases and prejudices of their creators. This can lead to discriminatory outcomes and reinforce existing social inequalities. As AI becomes more deeply embedded in our society, addressing this issue is essential to ensuring fairness and justice for all.

Another ethical dilemma surrounding AI is data privacy. Because AI relies heavily on data to learn and make decisions, there are concerns about how that data is collected, used, and protected. The Cambridge Analytica scandal and recent data breaches at major tech companies have only heightened these concerns. We must strike a balance between using data to develop AI and respecting individuals’ privacy and rights.

Furthermore, there is a growing fear of AI replacing human jobs. While automation can increase efficiency and productivity, it also raises concerns about job displacement and the need for workers to upskill to keep pace with an evolving job market. Companies and governments should prioritize retraining programs to prepare the workforce for an AI-driven future.

So, who should be held accountable for addressing these ethical issues? The responsibility falls on all stakeholders, including governments, technology companies, and individuals. Governments must establish regulatory frameworks and ethical standards to guide how AI is developed and used. Tech companies must practice transparency and diversity in their AI development processes. And individuals must educate themselves about AI and demand accountability from the companies whose products they use.

In the end, the future of AI depends on how we choose to regulate and use it. While it has the potential to bring about positive change, we must ensure that it serves everyone and not just a select few. As Tim Berners-Lee said, “We need to think about what we want to use AI for, what we want it to be, and how we want it to serve humanity.” And it’s up to all of us to shape the future of AI in a responsible and ethical manner.

So, the next time you interact with AI, remember to ask yourself, “Who does this AI work for?” Let’s make sure the answer is: all of us.